Thursday, November 28, 2013

Wednesday, November 27, 2013

At the Closing of an Age

Last week’s post here on The Archdruid Report suggested that the normal aftermath of an age of reason is a return to religion—in Spengler’s terms, a Second Religiosity—as the only effective bulwark against the nihilistic spiral set in motion by the barbarism of reflection. Yes, I’m aware that that’s a controversial claim, not least because so many devout believers in the contemporary cult of progress insist so loudly on seeing all religions but theirs as so many outworn relics of the superstitious past. This is a common sentiment among rationalists in every civilization, especially in the twilight years of ages of reason, and it tends to remain popular right up until the Second Religiosity goes mainstream and leaves the rationalists sitting in the dust wondering what happened.

I’d like to suggest that we’re on the brink of a similar transformation in the modern industrial world. The question that comes first to many minds when that suggestion gets made, though, is what religion or religions are most likely to provide the frame around which a contemporary Second Religiosity will take shape. It’s a reasonable question, but for several reasons it’s remarkably hard to answer.

The first and broadest reason for the difficulty is that the overall shape of a civilization’s history may be determined by laws of historical change, but the details are not. It was as certain as anything can be that some nation or other was going to replace Britain as global superpower when the British Empire ran itself into the ground in the early twentieth century. That it turned out to be the United States, though, was the result of chains of happenstance and choices of individual people going back to the eighteenth century if not further. If Britain had conciliated the American colonists before 1776, for example, as it later did in Australia and elsewhere, what is now the United States would have remained an agrarian colony dependent on British industry, there would have been no American industrial and military colossus to come to Britain’s rescue in 1917 and 1942, and we would all quite likely be speaking German today as we prepared to celebrate the birthday of Emperor Wilhelm VI.

In the same way, that some religion will become the focus of the Second Religiosity in any particular culture is a given; which religion it will be, though, is a matter of happenstance and the choices of individuals. It’s possible that an astute Roman with a sufficiently keen historical sense could have looked over the failing rationalisms of his world in the second century CE and guessed that one or another religion from what we call the Middle East would be most likely to replace the traditional cults of the Roman gods, but which one? Guessing that would, I think, have been beyond anyone’s powers; had the Emperor Julian lived long enough to complete his religious counterrevolution, for that matter, a resurgent Paganism might have become the vehicle for the Roman Second Religiosity, and Constantine might have had no more influence on later religious history than his predecessor Heliogabalus.

The sheer contingency of historical change forms one obstacle in the way of prediction, then. Another factor comes from a distinctive rhythm that shapes the history of popular religion in American culture. From colonial times on, American pop spiritualities have had a normal life cycle of between thirty and forty years. After a formative period of varying length, they grab the limelight, go through predictable changes over the standard three- to four-decade span, and then either crash and burn in some colorful manner or fade quietly away. What makes this particularly interesting is that there’s quite a bit of synchronization involved; in any given decade, that is, the pop spiritualities then in the public eye will all be going through a similar stage in their life cycles.

The late 1970s, for example, saw the simultaneous emergence of four popular movements of this kind: Protestant fundamentalism, Neopaganism, the New Age, and the evangelical atheist materialism of the so-called Sceptic movement. In 1970, none of those movements had any public presence worth noticing: fundamentalism was widely dismissed as a has-been phenomenon that hadn’t shown any vitality since the 1920s, the term “Neopagan” was mostly used by literary critics talking about an assortment of dead British poets, the fusion of surviving fragments of 1920s New Thought and Theosophy with the UFO scene that would give rise to the New Age was still out on the furthest edge of fringe culture, and the most popular and visible figures in the scientific community were more interested in studying parapsychology and Asian mysticism than in denouncing them.

The pop spiritualities that were on their way out in 1970, in turn, had emerged together in the wake of the Great Depression, and replaced another set that came of age around 1900. That quasi-generational rhythm has to be kept in mind when making predictions about American pop religious movements, because very often, whatever’s biggest, strongest, and most enthusiastically claiming respectability at any given time will soon be heading back out to the fringes or plunging into oblivion. It may return after another three or four decades—Protestant fundamentalism had its first run from just before 1900 to the immediate aftermath of the 1929 stock market crash, for example, and then returned for a second pass in the late 1970s—and a movement that survives a few such cycles may well be able to establish itself over the long term as a successful denomination. Even if it does accomplish this, though, it’s likely to find itself gaining and losing membership and influence over the same cycle thereafter.

The stage of the cycle we’re in right now, as suggested above, is the one in which established pop spiritualities head for the fringes or the compost heap, and new movements vie for the opportunity to take their places. Which movements are likely to replace fundamentalism, Neopaganism, the New Age and today’s “angry atheists” as they sunset out? Once again, that depends on happenstance and individual choices, and so is far from easy to predict in advance. There are certain regularities: for example, liberal and conservative Christian denominations take turns in the limelight, so it’s fairly likely that the next major wave of American Christianity will be aligned with liberal causes—though it’s anyone’s guess which denominations will take the lead here, and which will remain mired in the fashionable agnosticism and the entirely social and secular understanding of religion that’s made so many liberal churches so irrelevant to the religious needs of their potential congregations.

In much the same way, American scientific institutions alternate between openness to spirituality and violent rejection of it. The era of the American Society for Psychical Research was followed by that of the war against the Spiritualists, that gave way to a postwar era in which physicists read Jung and the Tao Te Ching and physicians interested themselves in alternative medicine, and that was followed in turn by the era of the Committee for Scientific Investigation of Claims of the Paranormal and today’s strident evangelical atheism; a turn back toward openness is thus likely in the decades ahead. Still, those are probabilities, not certainties, and many other aspects of American religious pop culture are a good deal less subject to repeating patterns of this kind.

All this puts up serious barriers to guessing the shape of the Second Religiosity as that takes shape in deindustrializing America, and I’m not even going to try to sort out the broader religious future of the rest of today’s industrial world—that would take a level of familiarity with local religious traditions, cultural cycles, and collective thinking that I simply don’t have. Here in the United States, it’s hard enough to see past the large but faltering pop spiritual movements of the current cycle, guess at what might replace them, and try to anticipate which of them might succeed in meeting the two requirements I mentioned at the end of last week’s post, which the core tradition or traditions of our approaching Second Religiosity must have: the capacity to make the transition from the religious sensibility of the past to the religious sensibility that’s replacing it, and a willingness to abandon the institutional support of the existing order of society and stand apart from the economic benefits as well as the values of a dying civilization.

Both of those are real challenges. The religious sensibility fading out around us has for its cornerstone the insistence that humanity stands apart from nature and deserves some better world than the one in which we find ourselves. The pervasive biophobia of that sensibility, its obsession with imagery of rising up from the earth’s surface, and most of its other features unfold from a basic conviction that, to borrow a phrase from one currently popular denomination of progress worshippers, humanity is only temporarily “stuck on this rock”—the “rock” in question, of course, being the living Earth in all her beauty and grandeur—and will be heading for something bigger, better, and a good deal less biological just as soon as God or technology or some other allegedly beneficent power gets around to rescuing us.

This is exactly what the rising religious sensibility of our age rejects. More and more often these days, as I’ve mentioned in previous posts, I encounter people for whom “this rock” is not a prison, a place of exile, a cradle, or even a home, but the whole of which human beings are an inextricable part. These people aren’t looking for salvation, at least in the sense that word has been given in the religious sensibility of the last two millennia or so, and which was adopted from that sensibility by the theist and civil religions of the Western world during that time; they are not pounding on the doors of the human condition, trying to get out, or consoling themselves with the belief that sooner or later someone or something is going to rescue them from the supposedly horrible burden of having bodies that pass through the extraordinary journey of ripening toward death that we call life.

They are seeking, many of these people. They are not satisfied with who they are or how they relate to the cosmos, and so they have needs that a religion can meet, but what they are seeking is wholeness within a greater whole, a sense of connection and community that embraces not only other people but the entire universe around them, and the creative power or powers that move through that universe and sustain its being and theirs. Many of them are comfortable with their own mortality and at ease with what Christian theologians call humanity’s “creaturely status,” the finite and dependent nature of our existence; what troubles them is not the inevitability of death or the reality of limits, but a lack of felt connection with the cosmos and with the whole systems that sustain their lives.

I suspect, to return to a metaphor I used in an earlier post here, that this rising sensibility is one of the factors that made the recent movie Gravity so wildly popular. The entire plot of the film centers on Sandra Bullock’s struggle to escape from the lifeless and lethal vacuum of space and find a way back to the one place in the solar system where human beings actually belong. To judge by the emails and letters I receive and the conversations I have, that’s a struggle with which many people in today’s industrial world can readily identify. The void scattered with sharp-edged debris they sense around them is more metaphorical than the one Bullock’s character has to face, but it’s no less real for that.

Can the traditions of the current religious mainstream or its established rivals speak to such people? Yes, though it’s going to take some significant rethinking of habitual language and practice to shake off the legacies of the old religious sensibility and find ways to address the needs and possibilities of the new one. It’s entirely possible that one or another denomination of Christianity might do that. It’s at least as possible that one or another denomination of Buddhism, the most solidly established of the current crop of imported faiths, could do it instead. Still, the jury’s still out.

The second requirement for a successful response to the challenge of the Second Religiosity bears down with particular force against these and other established religious institutions. Most American denominations of Christianity and Buddhism alike, for example, have a great deal of expensive infrastructure to support—churches and related institutions in the case of Christianity; monasteries, temples, and retreat centers in the case of Buddhism—and most of the successful denominations of both faiths, in order to pay for these things, have by and large taken up the same strategy of pandering to the privileged classes of American society. That’s a highly successful approach in the short term, but the emergence of a Second Religiosity is not a short term phenomenon; those religious movements that tie themselves too tightly to middle or upper middle class audiences are likely to find, as the floodwaters of change rise, that they’ve lashed themselves to a stone and will sink along with it.

In an age of decline, religious institutions that have heavy financial commitments usually end up in deep trouble, and those that depend on support from the upper reaches of the social pyramid usually land in deeper trouble still. It’s those traditions that can handle poverty without blinking that are best able to maintain themselves in hard times, just as it’s usually those same traditions that an increasingly impoverished society finds most congenial and easiest to support. Christianity in the late Roman world was primarily a religion of the urban poor, with a modest sprinkling of downwardly mobile middle-class intellectuals in their midst; Christianity in the Dark Ages was typified by monastic establishments whose members were even poorer than the impoverished peasants around them. Buddhism was founded by a prince but very quickly learned that absolute non-attachment to material wealth was not only a spiritual virtue but a very effective practical strategy.

In both cases, though, that was a long time ago, and most American forms of both religions—and most others, for that matter—are heavily dependent on access to middle- and upper middle-class parishioners and their funds. If that continues, it’s likely to leave the field wide open to the religions of the poor, to new religious movements that grasp the necessity of shoestring budgets and very modest lifestyles, or to further imports from abroad that retain Third World attitudes toward wealth.

I’m often asked in this context about the possibility that Druidry, the faith tradition I practice, might end up filling a core role in the Second Religiosity of industrial civilization. It’s true that we embraced the new religious sensibility long before it was popular elsewhere, and equally true that shoestring budgets and unpaid clergy are pretty much universal in Druid practice. Still, the only way I can see Druidry becoming a major factor in the deindustrial age is if every other faith falls flat on its nose; we have a strike against us that most other religious movements don’t have.

No, I don’t mean the accelerating decline of today’s pop Neopaganism. Old-fashioned Druid orders such as AODA, the order I head, routinely get confused with the Neopagan scene these days, but we were around long before Neopaganism began to take shape in the late 1970s—AODA was chartered in 1912, and traces its roots back to the eighteenth century—and we expect to be around long after it has cycled back out of fashion. If anything, the volunteer staff who handles AODA’s correspondence will be grateful for fewer emails saying, “Hi, I want to know if you have a grove in my area I can circle with on the Sabbats—Blessed be!” and thus less need for return emails explaining that we aren’t Wiccans and don’t celebrate the Sabbats, and that our groves and study groups are there to provide support for our initiates, not to put on ceremonies for casual attendees.

That is to say, AODA is an initiatory order, not a church in the doors-wide-open sense of the word, and that’s the strike against us mentioned above. I suspect most of my readers will have little if any notion of the quiet subculture of initiatory orders in the modern industrial world. There are a great many of them, mostly quite small, offering instruction in meditation, ritual, and a range of other transformative practices to those interested in such things. Initiatory orders in the Western world have usually been independent of public religious institutions—this was also true in classical times, when the Dionysian and Orphic mysteries, the Pythagorean Brotherhood, and later on the Neoplatonists and Gnostic sects filled much the same role we do today—while those in Asian countries are usually affiliated with the religious mainstream. In traditional Japan, for example, people interested in the sort of thing initiatory orders do could readily find their way to esoteric schools of Buddhism, such as the Shingon sect; this side of the Ganges, by contrast, attitudes of the religious mainstream toward such traditions have tended to veer from toleration through disapproval to violent persecution and back again.

Eccentric as it is, the world of initiatory orders has been my spiritual home since I got dissatisfied with the casual irreligion of my birth family and went looking for something that made more sense. A book I published last year tried to sum up some of what that world and its teachings have to say concerning the age of limits now upon us, and it had a modest success. Still, one thing all of us in the initiatory orders learn early on is that our work is something that appeals only to the few. Self-unfoldment through disciplines of realization, to borrow a crisp definition from what was once a widely read book on the subject, involves a great deal of hard and unromantic work on the self. For those of us who are called to it, there’s nothing more rewarding—but not that many people are called to it.

Monday, November 25, 2013

The World Falls Away From Itself

If you promise that you'll save the world, people will believe you. And when you don't, people will forget what you promised.

Thursday, November 21, 2013

The Language Breaks

You've got such beautiful words but none I can eat, none which block the rain, none which bandage my wounds, none which build a home.

Nothing beautiful, which did not work, ever became anything more than pretty.


Wednesday, November 20, 2013

Toward a Green Future, Part Three: The Barbarism of Reflection

One of the least helpful habits of modern thinking is the common but really rather weird insistence that if something doesn’t belong all the way to one side of a distinction, it must go all the way to the other side. Think about the way that the popular imagination flattens out the futures open to industrial society into a forced choice between progress and catastrophe, or the way that American political rhetoric can’t get past the notion that the United States must be either the best nation on earth or the worst: in both these cases and many more like them, a broad and fluid continuum of actual possibilities has been replaced by an imaginary opposition between two equally imaginary extremes.

The discussion of the different kinds of thinking in last week’s Archdruid Report post brushed up against another subject that attracts this sort of obsessive binary thinking, because it touched on the limitations of the human mind. Modern industrial culture has a hard time dealing with limits of any kind, but the limitations that most reliably give it hiccups are the ones hardwired into the ramshackle combination of neurology and mentality we use to think with. How often, dear reader, have you heard someone claim that there are no limits to the power of human thought—or, alternatively, that human thought can be dismissed as blind stumbling through some set of biologically preprogrammed routines?

A more balanced and less binary approach allows human intelligence to be seen as a remarkable but fragile capacity, recently acquired in the evolutionary history of our species, and still full of bugs that the remorseless beta testing of natural selection hasn’t yet had time to find and fix. The three kinds of thinking I discussed in last week’s post—figuration, abstraction, and reflection—are at different stages in that Darwinian process, and a good many of the challenges of being human unfold from the complex interactions of older and more reliable kinds of thinking with newer and less reliable ones.

Figuration is the oldest kind of thinking, as well as the most basic. Students of animal behavior have shown conclusively that animals assemble the fragmentary data received by their senses into a coherent world in much the same way that human beings do, and assemble their figurations into sequences that allow them to make predictions and plan their actions. Abstraction seems to have come into the picture with spoken language; the process by which a set of similar figurations (this poodle, that beagle, the spaniel over there) get assigned to a common category with a verbal label, “dog,” is a core example of abstract thinking as well as the foundation for all further abstraction.

It’s interesting to note, though, that figuration doesn’t seem to produce its most distinctive human product, narrative, until the basic verbal tools of abstraction show up to help it out. In the same way, abstraction doesn’t seem to get to work crafting theories about the cosmos until reflection comes into play. That seems to happen historically about the same time that writing becomes common, though it’s an open question whether one of these causes the other or whether both emerge from deeper sources. While human beings in all societies and ages are capable of reflection, the habit of sustained reflection on the figurations and abstractions handed down from the past seems to be limited to complex societies in which literacy isn’t restricted to a small religious or political elite.

An earlier post in this sequence talked about what happens next, though I used a different terminology there—specifically, the terms introduced by Oswald Spengler in his exploration of the cycles of human history. The same historical phenomena, though, lend themselves at least as well to understanding in terms of the modes of thinking I’ve discussed here, and I want to go back over the cycle here, with a close eye on the way that figuration, abstraction, and reflection shape the historical process.

Complex literate societies aren’t born complex and literate, even if history has given them the raw materials to reach that status in time. They normally take shape in the smoking ruins of some dead civilization, and their first centuries are devoted to the hard work of clearing away the intellectual and material wreckage left behind. Over time, barbarian warlords and warbands settle down and become the seeds of a nascent feudalism, religious institutions take shape, myths and epics are told and retold: all these are tasks of the figurative stage of thinking, in which telling stories that organize the world of human experience into meaningful shapes is the most important task, and no one worries too much about whether the stories are consistent with each other or make any kind of logical sense.

It’s after the hard work of the figurative stage has been accomplished, and the cosmos has been given an order that fits comfortably within the religious sensibility and cultural habits of the age, that people have the leisure to take a second look at the political institutions, the religious practices, and the stories that explain their world to them, and start to wonder whether they actually make sense. That isn’t a fast process, and it usually takes some centuries either to create a set of logical tools or to adapt one from some older civilization so that the work can be done. The inevitable result is that the figurations of traditional culture are weighed in the new balances of rational abstraction and found wanting.

Thus the thinkers of the newborn age of reason examine the Bible, the poems of Homer, or whatever other collection of mythic narratives has been handed down to them from the figurative stage, and discover that it doesn’t make a good textbook of geology, morality, or whatever other subject comes first in the rationalist agenda of the time. Now of course nobody in the figurative period thought that their sacred text was anything of the kind, but the collective shift from figuration to abstraction involves a shift in the meaning of basic concepts such as truth. To a figurative thinker, a narrative is true because it works—it makes the world make intuitive sense and fosters human values in hard times; to an abstractive thinker, a theory is true because it’s consistent with some set of rules that have been developed to sort out true claims from false ones.

That’s not necessarily as obvious an improvement as it seems at first glance. To begin with, of course, it’s by no means certain that knowing the truth about the universe is a good thing in terms of any other human value; for all we know, H.P. Lovecraft may have been quite correct to suggest that if we actually understood the nature of the cosmos in which we live, we would all imitate the hapless Arthur Jermyn, douse ourselves with oil, and reach for the matches. Still, there’s another difficulty with rationalism: it leads people to believe that abstract concepts are more real than the figurations and raw sensations on which they’re based, and that belief doesn’t happen to be true.

Abstract concepts are simply mental models that more or less sum up certain characteristics of certain figurations in the universe of our experience. They aren’t the objective realities they seek to explain. The laws of nature so eagerly pursued by scientists, for example, are generalizations that explain how certain quantifiable measurements are likely to change when something happens in a certain context, and that’s all they are. It seems to be an inevitable habit of rationalists, though, to lose track of this crucial point, and convince themselves that their abstractions are more real than the raw sensory data on which they’re based—that the abstractions are the truth, in fact, behind the world of appearances we experience around us. It’s wholly reasonable to suppose that there is a reality behind the world of appearances, to be sure, but the problem comes in with the assumption that a favored set of abstract concepts is that reality, rather than merely a second- or thirdhand reflection of it in the less than flawless mirror of the human mind.

The laws of nature make a good example of this mistake in practice. To begin with, of course, the entire concept of “laws of nature” is a medieval Christian religious metaphor with the serial numbers filed off, ultimately derived from the notion of God as a feudal monarch promulgating laws for all his subjects to follow. We don’t actually know that nature has laws in any meaningful sense of the word—she could simply have habits or tendencies—but the concept of natural law is hardwired into the structure of contemporary science and forms a core presupposition that few ever think to question.

Treated purely as a heuristic, a mental tool that fosters exploration, the concept of natural law has proven to be very valuable. The difficulty creeps in when natural laws are treated, not as useful summaries of regularities in the world of experience, but as the realities of which the world of experience is a confused and imprecise reflection. It’s this latter sort of thinking that drives the insistence, very common in some branches of science, that a repeatedly observed and documented phenomenon can’t possibly have taken place, because the existing body of theory provides no known mechanism capable of causing it. The ongoing squabbles over acupuncture are one example out of many: Western medical scientists don’t yet have an explanation for how it functions, and for this reason many physicians dismiss the reams of experimental evidence and centuries of clinical experience supporting acupuncture and insist that it can’t possibly work.

That’s the kind of difficulty that lands rationalists in the trap discussed in last week’s post, and turns ages of reason into ages of unreason: eras in which all collective action is based on some set of universally accepted, mutually supporting, logically arranged beliefs about the cosmos that somehow fail to make room for the most crucial issues of the time. It’s among history’s richer ironies that the beliefs central to ages of unreason so consistently end up clustering around a civil religion—that is, a figurative narrative that’s no more subject to logical analysis than the theist religions it replaced, and which is treated as self-evidently rational and true precisely because it can’t stand up to any sort of rational test, but which provides a foundation for collective thought and action that can’t be supplied in any other way—and that it’s usually the core beliefs of the civil religion that turn out to be the Achilles’ heel of the entire system.

All of the abstract conceptions of classical Roman culture thus came to cluster around the civil religion of the Empire, a narrative that defined the cosmos in terms of a benevolent despot’s transformation of primal chaos into a well-ordered community of hierarchically ranked powers. Jove’s role in the cosmos, the Emperor’s role in the community, the father’s role in the family, reason’s role in the individual—all these mirrored one another, and provided the core narrative around which all the cultural achievements of classical society assembled themselves. The difficulty, of course, was that in crucial ways, the cosmos refused to behave according to the model, and the failure of the model cast everything else into confusion. In the same way, the abstract conceptions of contemporary industrial culture have become dependent on the civil religion of progress, and are at least as vulnerable to the spreading failure of that secular faith to deal with a world in which progress is rapidly becoming a thing of the past.

It’s here that reflection, the third mode of thinking discussed in last week’s post, takes over the historical process. Reflection, thinking about thinking, is the most recent of the modes and the least thoroughly debugged. During most phases of the historical cycle, it plays only a modest part, because its vagaries are held in check either by traditional religious figurations or by rational abstractions. Many religious traditions, in fact, teach their followers to practice reflection using formal meditation exercises; most rationalist traditions do the same thing in a somewhat less formalized way; both are wise to do so, since reflection limited by some set of firmly accepted beliefs is an extraordinarily powerful way of educating and maturing the mind and personality.

The trouble with reflection is that thinking about thinking, without the limits just named, quickly shows up the sharp limitations on the human mind mentioned earlier in this essay. It takes only a modest amount of sustained reflection to demonstrate that it’s not actually possible to be sure of anything, and that way lies nihilism, the conviction that nothing means anything at all. Abstractions subjected to sustained reflection promptly dissolve into an assortment of unrelated figurations; figurations subjected to the same process dissolve just as promptly into an assortment of unrelated sense data, given what unity they apparently possess by nothing more solid than the habits of the human nervous system and the individual mind. Jean-Paul Sartre’s fiction expressed the resulting dilemma memorably: given that it’s impossible to be certain of anything, how can you find a reason to do anything at all?

It’s not a minor point, nor one restricted to twentieth-century French intellectuals. Shatter the shared figurations and abstractions that provide a complex literate society with its basis for collective thought and action, and you’re left with a society in fragments, where biological drives and idiosyncratic personal agendas are the only motives left, and communication between divergent subcultures becomes impossible because there aren’t enough common meanings left to make that an option. The plunge into nihilism becomes almost impossible to avoid once abstraction runs into trouble on a collective scale, furthermore, because reflection is the automatic response to the failure of a society’s abstract representations of the cosmos. As it becomes painfully clear that the beliefs of the civil religion central to a society’s age of reason no longer correspond to the world of everyday experience, the obvious next step is to reflect on what went wrong and why, and away you go.

It’s probably necessary here to return to the first point raised in this week’s essay, and remind my readers that the fact that human thinking has certain predictable bugs in the programming, and tends to go haywire in certain standard ways, does not make human thinking useless or evil. We aren’t gods, disembodied bubbles of pure intellect, or anything else other than what we are: organic, biological, animal beings with a remarkable but not unlimited capacity for representing the universe around us in symbolic form and doing interesting things with the resulting symbols. Being what we are, we tend to run up against certain repetitive problems when we try to use our thinking to do things for which evolution did little to prepare it. It’s only the bizarre collective egotism of contemporary industrial culture that convinces so many people that we ought to be exempt from limits to our intelligence—a notion just as mistaken and unproductive as the claim that we’re exempt from limits in any other way.

Fortunately, there are also reliable patches for some of the more familiar bugs. It so happens, for example, that there’s one consistently effective way to short-circuit the plunge into nihilism and the psychological and social chaos that results from it. There may be more than one, but so far as I know, there’s only one that has a track record behind it, and it’s the same one that provides the core around which societies come together in the first place: the raw figurative narratives of religion. What Spengler called the Second Religiosity—the renewal of religion in the aftermath of an age of reason—thus emerges in every civilization’s late history as the answer to nihilism; what drives it is the failure of rationalism either to deal with the multiplying crises of a society in decline or to provide some alternative to the infinite regress of reflection run amok.

Religion can accomplish this because it has an answer to the nihilist’s claim that it’s impossible to prove the truth of any statement whatsoever. That answer is faith: the recognition, discussed in a previous post in this sequence, that some choices have to be made on the basis of values rather than facts, because the facts can’t be known for certain but a choice must be made anyway—and choosing not to choose is still a choice. Nihilism becomes self-canceling, after all, once reflection goes far enough to show that a belief in nihilism is just as arbitrary and unprovable as any other belief; that being the case, the figurations of a religious tradition are no more absurd than anything else, and provide a more reliable and proven basis for commitment and action than any other option.

The Second Religiosity may or may not involve a return to the beliefs central to the older age of faith. In recent millennia, far more often than not, it hasn’t. As the Roman world came apart and the civil religion and abstract philosophies of the Roman world failed to provide any effective resistance to the corrosive skepticism and nihilism of the age, it wasn’t the old cults of the Roman gods that became the nucleus of a new religious vision, but new faiths imported from the Middle East, of which Christianity and Islam turned out to be the most enduring. Similarly, the implosion of Han dynasty China led not to a renewal of the traditional Chinese religion, but to the explosive spread of Buddhism and of the newly invented religious Taoism of Zhang Daoling and his successors. On the other side of the balance is the role played by Shinto, the oldest surviving stratum of Japanese religion, as a source of cultural stability all through Japan’s chaotic medieval era.

It’s still an open question whether the religious forms that will be central to the Second Religiosity of industrial civilization’s twilight years will be drawn from the existing religious mainstream of today’s Western societies, or whether they’re more likely to come either from the bumper crop of religious imports currently in circulation or from an even larger range of new religious movements contending for places in today’s spiritual marketplace. History strongly suggests, though, that whatever tradition or traditions break free from the pack to become the common currency of thought in the postrationalist West will have two significant factors in common with the core religious movements of equivalent stages in previous historical cycles. The first is the capacity to make the transition from the religious sensibility of the previous age of faith to the emerging religious sensibility of the time; the second is a willingness to abandon the institutional support of the existing order of society and stand apart from the economic benefits as well as the values of a dying civilization. Next week’s post will offer some personal reflections on how that might play out.

The Wind Blew The Light Away

The world didn't end in fire. It just blew away in the rain. And who can say anything at all.

Maybe, if you are anywhere at all, you can say to someone in Italy, Indiana or the Philippines, "Don't worry, we are on our way."

I will say "Just for now, it's ok to believe in ghosts."

Wednesday, November 13, 2013

Toward a Green Future, Part Two: The Age of Unreason

The biophobia discussed in last week’s post makes a useful example of the way that toxic ideas can have the same kind of impact on a society as toxic waste of a more material kind. This shouldn’t be a new point to any regular reader of this blog; I’ve commented in these essays rather more than once that the crisis of our age is not just a function of depleted resources and the buildup of pollutants in the biosphere, important as these details are. To a far more important degree, it’s a matter of depleted imaginations and the buildup of dysfunctional ideas in the collective consciousness of our time.

Most of the rising spiral of problems we face as the industrial age approaches its end could have been prevented with a little foresight and forbearance, and even now—when most of the opportunities to avoid a really messy future have long since gone whistling down the wind—there’s still much that could be done to mitigate the worst consequences of the decline and fall of the industrial age and pass on the best achievements of the last few centuries to our descendants. Of the things that could be done to make the future less miserable than it will otherwise be, though, very few are actually being done, and those have received what effort they have only because scattered individuals and small groups out on the fringes of contemporary industrial society are putting their own time and resources into the task.

Meanwhile the billions of dollars, the vast public relations campaigns, and the lavishly supplied and funded institutional networks that could do these same things on a much larger scale are by and large devoted to projects that are simply going to make things worse. That’s the bitter irony of our age and, more broadly, of every civilization in its failing years. No society has to be dragged kicking and screaming down the slope of decline and fall; one and all, they take that slope at a run, yelling in triumph, utterly convinced that the road to imminent ruin will lead them to paradise on Earth.

That’s one of the ways that the universe likes to blindside believers in a purely materialist interpretation of history. Modern industrial society differs in a galaxy of ways from the societies that preceded it into history’s compost heap, and it’s easy enough—especially in a society obsessed with the belief in its superiority to every other civilization in history—to jump from the fact of those differences to the conviction that modern industrial society must have a unique fate: uniquely glorious, uniquely horrible, or some combination of the two, nobody seems to care much as long as it’s unique. Then the most modern industrial social and economic machinery gets put to work in the service of stupidities that were old before the pyramids were built, because human beings rather than machinery make the decisions, and the motives that drive human behavior don’t actually change that much from one millennium to the next.

What does change from millennium to millennium, and across much shorter eras as well, are the ideas and beliefs built atop the foundation provided by the motivations just mentioned. One of the historians whose work has been central to this blog’s project, Oswald Spengler, had much to say about the way that different high cultures came to understand the world in such dramatically different ways. He pointed out that the most basic conceptions about reality vary from one culture to another. Modern Western thinkers can’t even begin to understand the world, for example, without the conception of infinite empty space; to the thinkers of Greek and Roman times, by contrast, infinity and empty space were logical impossibilities, and the cosmos was—had to be—a single material mass.

Still, there’s another dimension to the way thoughts and beliefs change over time, and it takes place within the historical trajectory of what Spengler called a culture and his great rival Arnold Toynbee called a civilization. This is the dimension that we recall, however dimly, when we speak of the Age of Faith and the Age of Reason. Since these same ages recur in the life of every high culture, we might more usefully speak of ages of faith and ages of reason in the plural; we might also want to discuss a third set of ages, to which I gave a suggestive name in a post a while back, that succeed ages of reason in much the same way that the latter supplant ages of faith.

Now of course the transition between ages of faith and ages of reason carries a heavy load of self-serving cant these days. The rhetoric of the civil religion of progress presupposes that every human being who lived before the scientific revolution was basically just plain stupid, since otherwise they would have gotten around to noticing centuries ago that modern atheism and scientific materialism are the only reasonable explanations of the cosmos. Thus a great deal of effort has been expended over the years on creative attempts to explain why nobody before 1650 or so realized that everything they believed about the cosmos was so obviously wrong.

A more useful perspective comes out of the work of Giambattista Vico, the modern Western world’s first great theorist of historical cycles. Vico’s 1744 opus Principles of the New Science Concerning the Common Nature of Nations—for obvious reasons, the title normally gets cut down nowadays to The New Science—focuses on what he called “the course the nations run,” the trajectory that leads a new civilization up out of one dark age and eventually back down into the next. Vico wrote in an intellectual tradition that’s all but extinct these days, the tradition of Renaissance humanism, saturated in ancient Greek and Roman literature and wholly at ease in the nearly forgotten worlds of mythological and allegorical thinking. Even at the time, his book was considered difficult by most readers, and it’s far more opaque today than it was then, but the core ideas Vico was trying to communicate are worth teasing out of his convoluted prose.

One useful way into those ideas is to start where Vico did, with the history of law. It’s a curious regularity in legal history that law codes start out in dark age settings as lists of specific punishments for specific crimes—“if a man steal a loaf of bread, let him be given twelve blows with a birch stick”—without a trace of legal theory or even generalization. Later, all the different kinds of stealing get lumped together as the crime of theft, and the punishment assigned to it usually comes to depend at least in part on the abstract value of what’s stolen. Eventually laws are ordered and systematized, a theory of law emerges, and great legal codes are issued providing broad general principles from which jurists extract rulings for specific cases. By that time, the civilization that created the legal code is usually stumbling toward its end, and its fall is the end of the road for its highly abstract legal system; when the rubble stops bouncing, the law codes of the first generation of successor states go right back to lists of specific punishments for specific crimes. As Vico pointed out, the oldest form of Roman law, the Twelve Tables, and the barbarian law codes that emerged after Rome’s fall were equally concrete and unsystematic, even though the legal system that rose and fell between them was one of history’s great examples of legal systematization and abstraction.

What caught Vico’s attention is that the same process appears in a galaxy of other human institutions and activities. Languages emerge in dark age conditions with vocabularies rich in concrete, sensuous words and very poor in abstractions, and transform those concrete words into broader, more general terms over time—how many people remember that “understand” used to mean to stand under, in the sense of getting in underneath to see how something works? Political systems start with the intensely personal and concrete feudal bonds between liege lord and vassal, and then shift gradually toward ever more abstract and systematic notions of citizenship. Vico barely mentioned economics in his book, but it’s a prime example: look at the way that wealth in a dark age society means land, grain, and lumps of gold, which get replaced first by coinage, then by paper money, then by various kinds of paper that can be exchanged for paper money, and eventually by the electronic hallucinations that count as wealth today.

What’s behind these changes is a shift in the way that thinking is done, and it’s helpful in this regard to go a little deeper than Vico himself did, and remember that the word “thinking” can refer to at least three different kinds of mental activity. The first is so pervasive and so automatic that most people only notice it in unusual situations—when you wake up in the dark in an unfamiliar room, for example, and it takes several seconds for the vague looming shapes around you to turn into furniture. Your mind has to turn the input of your senses into a world of recognizable objects. It does this all the time, though you don’t usually notice the process; the world you experience around you is literally being assembled by your mind moment by moment. We can borrow a term from Owen Barfield for the kind of thinking that does this, and call it figuration.

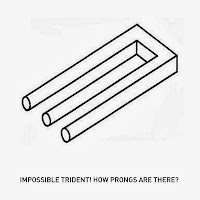

Figuration’s a more complex process than most people realize. If you look at the optical illusion shown here, you can watch that process at work: your mind tries to make sense of the shapes on the screen, and flops back and forth between the available options. If you look at an inkblot from the Rorschach test and see two bats having a romantic interlude, that’s figuration, too, and it reveals one of the things that happens when figuration gets beyond the basics: it starts to tell stories. Listen to children who aren’t yet old enough to tackle logical reasoning, especially when they don’t know you’re listening, and you’ll often hear figuration in full roar: everything becomes part of a story, which may not make any sense at all from a logical perspective, but connects everything together in a single narrative that makes its own kind of sense of the world of experience.

It’s when children, or for that matter adults, start to compare figurations to each other that a second kind of thinking comes into play, which we can call abstraction. You have this figuration over here, which combines the sensations of brown, furry, movement, barking, and much more into a single object; you have that one over there, which combines most of the same sensations, in a different place, into a different object; from these figurations, you abstract the common features and give the sum of those features a name, “dog.” That’s abstraction. The child who calls every four-legged animal “goggie” has just started to grasp abstraction, and does it in the usual way, starting from the largest and broadest abstract categories and narrowing down from there. As she becomes more experienced at it, she’ll learn to relabel that abstraction “animal,” or even “quadruped,” while a cascade of nested categories allows her to grasp that Milo and Maru are both animals, but one is a dog and the other a cat.

Just as figuration allowed to run free starts to tell stories, abstraction allowed the same liberty starts to construct theories. A child who’s old enough to abstract but hasn’t yet passed to the third kind of thinking is a great example. Ask her to speculate about why something happens, and you’ll get a theory instead of a story—the difference is that, where a story simply flows from event to event, a theory tries to make sense of the things that happen by fitting them into abstract categories and making deductions on that basis. The categories may be inappropriately broad, narrow, or straight out of left field, and the deductions may be whimsical or just plain weird, but it’s from such tentative beginnings that logic and science gradually emerge in individuals and societies alike.

Figuration, then, assembles a world out of fragments of present and remembered sensation. Abstraction takes these figurations and sorts them into categories, then tries to relate the categories to one another. It’s when the life of abstraction becomes richly developed enough that there emerges a third kind of thinking, which we can call reflection. Reflection is thinking about thinking: stepping outside the world constructed by figuration to think about how figurations are created from raw sensation, stepping outside the cascading categories created by abstraction to think about where those categories came from and how well or poorly they fit the sensations and figurations they’re meant to categorize. Where figuration tells stories and abstraction creates theories, reflection can lead in several directions. Done capably, it yields wisdom; done clumsily, it plunges into self-referential tailchasing; pushed too far, it can all too easily end in madness.

Apply these three modes of thinking to the historical trajectory of any civilization and the parallels are hard to miss. Figuration dominates the centuries of a society’s emergence and early growth; language, law, and the other phenomena mentioned above focus on specific examples or, as we might as well say, specific figurations. The most common form of intellectual endeavor in such times is storytelling—it’s not an accident that the great epics of our species, and the vast majority of its mythologies, come out of the early stages of high cultures. If the logical method of a previous civilization has been preserved, which has been true often enough in recent millennia, it exists in a social bubble, cultivated by a handful of intellectuals but basically irrelevant to the conduct of affairs. Religion dominates cultural life, and feudalism or some very close equivalent dominates the political sphere.

It’s usually around the time that feudalism is replaced by some other system of government that the age of faith gives way to the first stirrings of an age of reason or, in the terms used here, abstraction takes center stage away from figuration. At first, the new abstraction sets itself the problem of figuring out what religious myths really mean, but since those narratives don’t “mean” anything in an abstract sense—they’re ways of assembling experience into stories the mind can grasp, not theories based on internally consistent categorization and logic—the myths eventually get denounced as a pack of lies, and the focus shifts to creating rational theories about the universe. Epic poetry and mythology give way to literature, religion loses ground to secular scholarship such as classical philosophy or modern science, and written constitutions and law codes replace feudal custom.

Partisans of abstraction always like to portray these shifts as the triumph of reason over superstition, but there’s another side to the story. Every abstraction is a simplification of reality, and the further you go up the ladder of abstractions, the more certain it becomes that the resulting concepts will fail to match up to the complexities of actual existence. Nor is abstraction as immune as its practitioners like to think to the influences of half-remembered religious narratives or the subterranean pressures of ordinary self-interest—it’s utterly standard, for example, for the professional thinkers of an age of reason to prove with exquisite logic that whatever pays their salaries is highly reasonable and whatever threatens their status is superstitious nonsense. The result is that what starts out proclaiming itself as an age of reason normally turns into an age of unreason, dominated by a set of closely argued, utterly consistent, universally accepted rational beliefs whose only flaw is that they fail to explain the most critical aspects of what’s happening out there in the real world.

In case you haven’t noticed, dear reader, that’s more or less where we are today. It’s not merely that the government of every major industrial nation is trying to achieve economic growth by following policies that are supposed to bring growth in theory, but have never once done so in practice; it’s not merely that the populace of every major industrial society eagerly forgets all the lessons of each speculative bubble and bust as soon as the next one comes along, and makes all the same mistakes with the same dismal results as the previous time; it’s not even that allegedly sane and sensible people have somehow managed to convince themselves that limitless supplies of fossil fuels can somehow be extracted at ever-increasing rates from the insides of a finite planet: it’s that only a handful of people out on the furthest fringes of contemporary culture ever notice that there’s anything at all odd about these stunningly self-defeating patterns of behavior.

It’s at this stage of history that reflection becomes necessary. It’s only by thinking about thinking, by learning to pay attention to the way we transform the raw data of the senses into figurations and abstractions, that it becomes possible to notice what’s being excluded from awareness in the course of turning sensation into figurations and sorting out figurations into cascading levels of abstraction. Yes, that’s part of the project of this blog—to reflect on how we as a society got in the habit of thinking the things we think, and how well or poorly that thinking relates to the world we’re actually encountering.

It’s at this same stage of history, though, that reflection also becomes a lethal liability, because wisdom is not the only possible outcome of reflection. Vico points out that there’s a barbarism of reflection that comes at the end of a civilization’s life cycle, parallel to the barbarism of sensation that comes at the beginning—and also ancestral to it. Reflection is a solvent; skillfully handled, it dissolves abstractions and figurations that obscure more than they reveal, so that less counterproductive ways of assembling raw sensation into meaningful patterns can be pursued; run riot, it makes every abstraction and every figuration as arbitrary and meaningless as any other, until the collective conversation about what’s real and what matters dissolves in a cacophony of voices speaking past one another.

Wednesday, November 6, 2013

Toward a Green Future, Part One: The Culture of Biophobia

To understand the predicament of industrial civilization, it’s not enough to grasp the outward shape of the crisis of our time: the looting of a finite planet’s stock of resources, the destabilization of the global climate, the breathtaking cluelessness with which politicians, pundits, and ordinary citizens alike insist that the only way we can get out of this mess involves doing even more of the same things that got us into it in the first place, and the rest of it. Follow the roots of our predicament down into the soil that feeds them, and you’ll find yourself in a murky realm of unspoken narratives and unacknowledged desires—the “mind-forg’d manacles,” as Blake called them, that keep most of us shackled in place as the great rumbling vehicle of global industrial society accelerates down the slope of its decline and fall.

Over the last seven months, I’ve tried to open up the obscurities of that subterranean realm using the language of religion as a tool. This is far from the first time that I’ve discussed the religious dimension of our blind faith in perpetual progress in these essays, but it’s the first time I’ve done so at length, and the sheer intensity of the emotions roused on all sides of the discussion is to my mind a sign of just how important that dimension has become.

The distinction made in an earlier post between religions and religious sensibilities is crucial to making sense of all this. Most discussions of the interfaces between religion, ecology, and the future have missed this distinction, and focused either on specific religious traditions or on the vague abstraction of religion as a whole. The resulting debates were not especially useful to anybody.

A classic example is the furore kickstarted by the 1967 publication of Lynn White’s famous essay “The Historical Roots of Our Ecological Crisis.” White argued that the rise of Christianity to its dominant position in the religious life of the western world was an essential precondition for the environmental crisis of our time. The old polytheist religions of the west, in his analysis, saw nature as sacred, the abode of a galaxy of numinous powers that could not be ignored with impunity; Christianity, by contrast, brought with it an image of the world as a lifeless mass of matter, an artifact put there by God for the sole benefit of human beings during the relatively brief period between the creation of the world and the Second Coming, after which it would be replaced by a new and improved model. By stripping nature of any inherent claim to human reverence, he suggested, Christianity made it easier for post-Christian western humanity to treat the earth as a lump of rock with no value beyond its use as a source of raw materials or a dumping ground for waste.

The debate that followed the appearance of White’s paper followed a trajectory many of my readers will find familiar. Partisans of White’s view defended it by digging up examples from history in which Christianity had been used to justify the abuse of nature, and had no trouble finding a bumper crop of instances. Opponents of White’s view attacked it by showing that the abuse of nature was not actually justified within a Christian worldview, and by and large they had no trouble finding a bumper crop of good theological grounds for their case.

What’s more, both were right. On the one hand, there’s nothing in Christian theology that requires the abuse of nature, and a very strong case can be made, from within the context of Christian faith, for the preservation of the environment as an imperative duty. On the other, over the course of the last two thousand years, very few Christians anywhere have recognized that duty, and a great many have used (and continue to use) excuses drawn from their faith to justify their abuse of the environment.

Factor in the influence of religious sensibilities and the paradox evaporates. A religious sensibility, again, is not a religion; it’s the cultural substructure of perceptions, emotions and intuitions that shape the way religious traditions are understood and practiced within a given culture or a set of related cultures. The religious sensibility that shaped Christian attitudes toward nature, and of course a great many other things besides, wasn’t unique to Christianity in any sense; it had already emerged in the Mediterranean world long before Jesus of Nazareth was born, and only the fact that Christianity happened to come out on top in the bitter religious struggles of the late classical world and suppressed nearly all its rivals gave White’s condemnation as much plausibility as it had.

As I sit here at my desk, for instance, I’m looking at a copy of On the Nature of the Universe by Ocellus Lucanus, a philosophical treatise probably written in the second or third century before the Common Era. Ocellus, like many of the cutting-edge thinkers of his age, wanted to challenge the popular notion that the cosmos had a beginning and might therefore have an end. That was part of a broader agenda—one that’s left significant traces in many contemporary currents of thought—that dismissed everything that came into being and passed away again as illusion, and tried to find a reality outside of the realm of time and change.

That commitment led to strange convictions. The fourth chapter of Ocellus’ treatise, for example, is devoted to proving that human beings ought to have sex. If, as Ocellus argues, the cosmos is eternal, it needs to remain perpetually stocked with its full complement of living things, and therefore human beings ought to keep on reproducing themselves—as long as they don’t enjoy the process, that is. Back of this distinctly odd argument lies the emergence, then under way, of that strain of thought we now call puritanism: the conviction that biological pleasures are always suspect, and can be permitted only when the actions that bring them also have some morally justifiable purpose.

In the generations following Ocellus’ time, that sort of thinking became standard in intellectual circles across the Mediterranean world, in modes ranging from the reasonable to the arguably psychotic. For all their subsequent reputation, the Stoics were on the mellow end; most Stoic thinkers classed sex as “indifferent,” meaning that it had no moral character of its own and could be right or wrong depending on the circumstances surrounding it. (Stoics criticized adultery, not because it was sex, but because it was breaking a promise, which they found utterly abhorrent.) The spectrum ran all the way from there to religious cults that made castration a sacrament or considered reproduction the most horrible sin of all because it trapped more souls in the prison of the flesh.

That was the religious sensibility of cutting-edge thinkers all through the world in which Christianity emerged, and since the new religion inevitably drew most of its early converts from people who were unsatisfied by the robust life-affirming traditional faiths the people of that time had inherited from their far from puritanical ancestors, it’s hardly a surprise that Christian teachings and institutions ended up absorbing a substantial helping of the attitudes that arose out of the rising religious sensibility of the time. Every human cultural phenomenon is complex, contested, and polyvalent, and the religious landscape of the western world is no exception to this rule; religious attitudes toward sex in that setting ranged from the Free Spirit movement in late medieval Europe, which indulged in orgies as a sign that its members had returned to Eden, all the way to the Skoptsii of early modern Russia, who castrated themselves as a shortcut to perfect purity. Still, the average fell further toward the puritanical side of the scale than even so ascetic a pagan movement as the Stoics found reasonable.

I’ve used sex as an example here, partly because people perk up their ears whenever it’s mentioned and partly because it’s a good barometer of attitudes toward the biological side of human existence, but the same point can be traced much more broadly. White pointed to the sacred groves, outdoor worship, and ecological taboos of classical Mediterranean pagan religion, and contrasted this with the relative lack of veneration for natural ecosystems in Christianity. It’s certainly possible to point to counterexamples, from St. Francis of Assisi through the Anglican natural theology of the Bridgewater Treatises to the impressive efforts currently being made by Patriarch Bartholomew of Constantinople to establish ecological consciousness throughout the Eastern Orthodox church; the fact remains that so far these have been the exception rather than the rule. Given the sensibility in which the Christian church came to maturity, it’s hard to see how things could have gone any other way.

As the theist religions of the west gave way to civil religions, in turn, the same patterns held. Once again, that wasn’t true in a monolithic sense, and the first great wave of civil religion to hit the western world—the nationalism of the 18th and 19th centuries—went the other way, embracing reverence for nature and the irrational dimensions of life as a counterpoise to the cosmopolitan rationalism of the age. That’s why the first verse of “America the Beautiful”—consider the title, to start with—is about the American land, not its human history or political pretensions. Still, the ease with which that thinking was dropped by the self-proclaimed patriots of today’s American pseudoconservatism shows how shallow its roots were in the collective consciousness of our civilization. Back in the Seventies, you would sometimes hear that first verse sung in a rather more edgy form:

O ugly now for poisoned skies, for pesticided grain,

For strip-mined mountains’ travesty above the asphalt plain,

America! America! Man shed his waste on thee,

And milled the pines for billboard signs from sea to oily sea.

Back in the day, that stung. Sing it now, when it’s even more true than it was then, and the most common response you’ll get is blustering about jobs and the onward march of progress. Other ages have seen the same process at work: it’s when the balancing act among traditional narratives, mystical experience, and religious sensibility finally fails, and the theist religions of a civilization’s childhood and youth give way to the civil religions of its maturity and decay, that the underlying logic of its religious sensibility gets pushed to the logical extreme, and appears in its starkest form. In our case, that’s biophobia: the pervasive fear and hatred of biological existence that forms the usually unmentioned foundation for so much of contemporary culture.

Does that seem too strong a claim to you, dear reader? I encourage you to consider your own attitudes toward your own biological life, that normal and healthy process of ripening toward mortality in which you’re engaged right now. Life in that sense is not a nice clean abstract existence. It’s a wet and sloppy reality of blood, mucus, urine, feces, and other sticky substances, proceeding all the way from the mess in which each of us is born to the mess in which most of us will die. It’s about change, growth and decay, and death—especially about death. Death isn’t the opposite of life, any more than birth is; it’s the natural completion and fulfillment of the process of being alive, and it’s something that people in a great many other cultures have been able to meet calmly, even joyfully, as a matter of course. Our terror of death is a good measure of our terror of life.

It’s an equally good measure of the complexity of religious sensibilities that some of the most cogent critiques of modern biophobia come from Christian writers. I’m thinking here especially of C.S. Lewis, who devoted the best of his adult novels—the space trilogy that includes Out of the Silent Planet, Perelandra, and That Hideous Strength—to tracing out the implications of the religion of progress that was replacing Christianity in the Britain of his time. Into the mouths of the staff of the National Institute for Coordinated Experiments, the villains of the third book, Lewis put much of the twaddle about limitless progress being retailed by the scientists of his time. Why should we put up with having the earth infested with other living things? Why not make it a nice, clean, sterile planetary machine devoted entirely to the benefit of human beings—or, rather, that minority of human beings who are capable of rational cooperation in the grand cause of Man? Once we outgrow sentimental attachments to lower life forms, outdated quibbles about the moral treatment of other human beings, and suchlike pointless barriers to progress, nothing can stop our great leap outward to the stars!

You don’t hear the gospel of progress preached in quite so unrelenting a form very often these days, but the implications are still there. Consider the gospel of the Singularity currently being preached by Ray Kurzweil and his followers. I’ve commented before that Kurzweil’s prophecy is the fundamentalist Christian myth of the Rapture dolled up unconvincingly in science fiction drag, but there’s one significant difference. According to every version of Christian theology I know of, the god who will be directing the final extravaganza is motivated by compassion and has detailed personal experience of life in the wet and sticky sense discussed above, while the hyperintelligent supercomputers that fill the same role in Kurzweil’s mythology lack these job qualifications.

It’s thus not exactly encouraging that writers on the Singularity seem remarkably comfortable with the thought that these same supercomputers might decide to annihilate humanity instead of giving them the glorified robot bodies of the cyber-blessed in which Kurzweil puts his hope of salvation. It’s equally unencouraging when these same writers, or others of the same stripe, say that they don’t care if our species goes extinct so long as artificial intelligences of our making end up zooming across the cosmos. The same logic lies behind the insistence, quite common these days in certain circles, that our species can’t possibly remain “stuck on this rock”—the rock in question being the living Earth—and that somehow we can only thrive out there in the black and silent void.

I’m pretty sure that this is why the recent film Gravity has fielded such a flurry of nitpicking from science writers. What believers in progress hate about Gravity, I suggest, is not that it takes modest liberties with the details of space science—show me a science fiction film that doesn’t do so—but that it doesn’t romanticize space. It reminds its audiences that space isn’t the Atlantic Ocean, the Wild West, or any of the other models of terrestrial discovery and colonization that proponents of space travel have tried to map onto it. Space, not death, is the antithesis of life: empty, silent, cold, limitless, and as sterile as hard vacuum and hard radiation can make it. Watching Sandra Bullock struggling to get back to the only place in the cosmos where human beings actually belong is a sharp reminder of exactly what lies behind all that handwaving about “New Worlds for Man.”

Turn from the mythology of progress to the mythology of apocalypse, the Tweedledoom to Kurzweil’s Tweedledee, and you’re at least as likely to find biophobia, though these days it often takes an oddly sidelong form. Consider the passionate insistence, heard with great regularity on one end of the peak oil scene, that something or other is going to render life on Earth extinct sometime very soon—the usual date these days, now that 2012 has passed by without incident, is 2030. A while back, the favored cause of imminent extinction was runaway climate change; nuclear waste became popular after that, and most recently the death of the world’s oceans has become a common justification for the belief. None of these claims are backed by more than a tiny minority of scientific studies, but I can promise you that if you point this out, you will face angry accusations of peddling “hopium.”

The people who spread these claims very often make much of their love for the Earth, but I have to say I find that insistence a bit disingenuous. Imagine, dear reader, that one of your loved ones—let’s call her Aunt Eartha, after one of my favorite jazz singers—has been told by a doctor that she has inoperable terminal cancer. Being aware that misdiagnosis is epidemic in today’s American medical industry, she seeks a second opinion, and you go with her to the hospital. A few hours later, the doctor comes to meet you in the waiting room, and tells you that he has good news: the first doctor has made a mistake, and there’s every chance Aunt Eartha still has many healthy years ahead of her.

Would you be likely to respond to this by becoming furious with the doctor and insisting that he was peddling hopium? If the doctor proceeded to show you the test results in detail and demonstrate why Aunt Eartha was in better shape than you feared, would you then go on to insist that if she wasn’t about to die of cancer, she was bound to die soon from diabetes, and when the tests didn’t bear this out either, would you start insisting that she must have severe heart disease? And if you did so, would the doctor perhaps be justified in wondering just how deep your professed love for Aunt Eartha actually went?

Mind you, those who talk about hopium have a point; the popular faith-based response to the crisis of our time that relies on the sacred words “I’m sure they’ll think of something” is a drug of sorts. Still, it bears remembering that the opposite of a bad idea is usually another bad idea, and hopium isn’t the only drug on the market just now; another is the equally deliberate and equally faith-based cultivation of despair. By analogy, we may as well call this “despairoin;” just as opium can be purified of the natural phytochemicals that make it hallucinatory and refined into heroin, hopium can be stripped of the hallucinatory fantasies of a bright new future, refined into despairoin, and peddled to addicts on the mean streets of the industrial world’s collective imagination.

I’ve suggested in the past that one of the things the paired myths of inevitable progress and inevitable apocalypse have in common is that both of them serve as excuses for inaction. Claim that progress is certain to save us all, or claim that some catastrophe or other is certain to doom us all, and either way you have a great justification for staying on the sofa and doing nothing. I’ve come to think, though, that the two mythologies share more in common than that. It’s true that both represent a refusal of what Joseph Campbell called the “call to adventure,” the still small voice summoning each of us to rise up in an age of crisis and decay to become the seedbearers of an age not yet born, but both mythologies also pretend to offer an escape from life, in the full, messy, intensely real sense I’ve suggested above.
